Search engines are no longer just digital libraries; they have evolved into thinking assistants. For years, we optimized for keywords through SEO (Search Engine Optimization). Then, with the shift toward direct answers, we optimized for questions with AEO (Answer Engine Optimization). And now, with the rise of Large Language Models (LLMs), we must optimize for synthesis through GEO (Generative Engine Optimization).

As an engineer, I view this not as the “death of SEO,” but as an evolution of data processing layers. Here is a technical breakdown of how these three architectures coexist and how to build a future-proof strategy.

Technical Comparison: The Data Processing Stack

| Feature | SEO (Traditional) | AEO (Voice/Snippets) | GEO (AI/LLMs) |
| --- | --- | --- | --- |
| Input Data | Keywords & Backlinks | Structured Content (Q&A) | Entities & Facts |
| Process | Ranking Algorithm | Information Extraction | Content Synthesis (RAG) |
| Output | List of Blue Links | Single Direct Answer | Generated Paragraphs |
| Key Optimization | Meta Tags, H1-H3, Speed | Conciseness, Schema.org | Authority, Data Density |
| Success Metric | Traffic (Clicks) | Visibility (Zero-Click) | Brand Mentions/Citations |
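To make the “Content Synthesis (RAG)” column concrete, here is a toy retrieve-then-cite sketch. It is an illustration under loose assumptions, not how any particular engine works. The page URLs and texts are hypothetical, and simple term overlap stands in for the embedding search that production systems typically use. What it shows is the dependency between the columns: a page that is never retrieved can never be cited.

```python
import re
from collections import Counter

# Hypothetical indexed pages (URL -> text), for illustration only.
PAGES = {
    "https://example.com/geo-guide": "GEO optimizes content so generative engines cite it as a source.",
    "https://example.com/seo-basics": "SEO improves rankings through keywords, backlinks and page speed.",
    "https://example.com/aeo-snippets": "AEO structures answers so engines can extract a single direct answer.",
}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query: str, pages: dict[str, str], k: int = 2) -> list[tuple[str, int]]:
    """Rank pages by shared terms with the query (a stand-in for embedding similarity)."""
    query_terms = Counter(tokenize(query))
    scored = []
    for url, text in pages.items():
        overlap = sum((query_terms & Counter(tokenize(text))).values())
        if overlap:  # pages sharing no terms are never retrieved
            scored.append((url, overlap))
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


def build_prompt(query: str, pages: dict[str, str], k: int = 2) -> str:
    """Assemble a grounded prompt with numbered sources the model is asked to cite."""
    hits = retrieve(query, pages, k)
    sources = "\n".join(f"[{i}] {url}: {pages[url]}" for i, (url, _) in enumerate(hits, 1))
    return f"Answer using only the sources below and cite them as [n].\n{sources}\nQuestion: {query}"


print(build_prompt("How do generative engines cite a source?", PAGES))
```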

Optimization Blueprints: Strategies for Each Layer

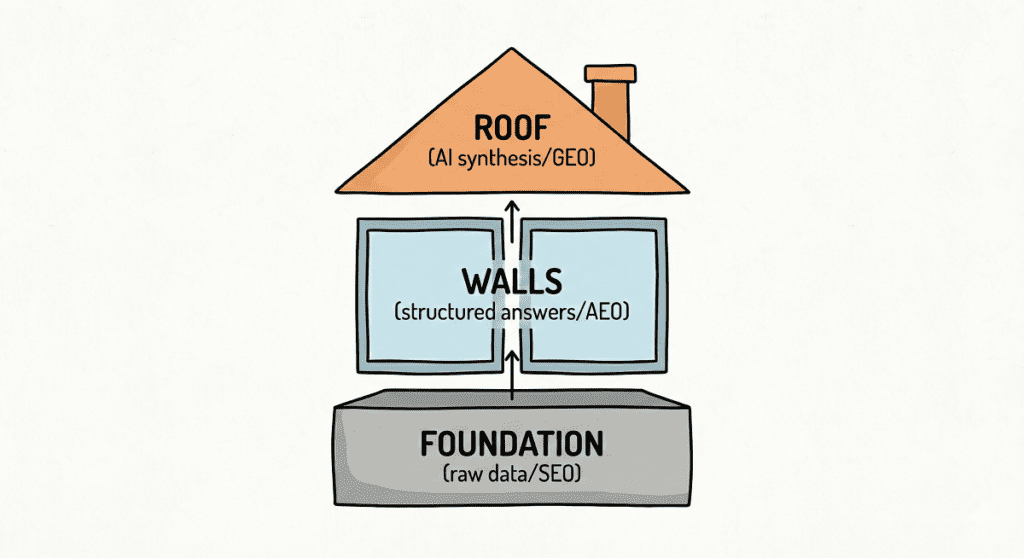

To maximize visibility across the entire search landscape, we need distinct strategies for each processing layer. To understand the dependencies, let’s look at the structural hierarchy: from the technical Foundation (SEO), through the structured Walls (AEO), up to the generative Roof (GEO).

1. SEO Strategy: The Technical Foundation

SEO is the bedrock. Without it, your content cannot be discovered, let alone synthesized.

- Technical Health: Ensure crawlability. If `robots.txt` blocks the crawler, the downstream layers (AEO/GEO) fail immediately; slow or unstable pages (as measured by Core Web Vitals) weaken the foundation as well.

- Keyword Intent: Focus on the specific phrases users type.

- Internal Linking: Build a graph of connections that helps bots understand the site structure.
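The Internal Linking point becomes measurable once the site is treated as a directed graph. The sketch below assumes you have already extracted a map from each URL to the internal links on it (the `LINKS` data is hypothetical). It computes click depth from the homepage with a breadth-first search and lists orphan pages that no internal link reaches.

```python
from collections import deque

# Hypothetical internal-link graph: page -> pages it links to.
LINKS = {
    "/": ["/blog", "/services"],
    "/blog": ["/blog/seo-aeo-geo", "/"],
    "/services": ["/contact"],
    "/blog/seo-aeo-geo": ["/services"],
    "/contact": [],
    "/old-landing-page": ["/"],  # links out, but nothing links to it
}


def click_depths(links: dict[str, list[str]], start: str = "/") -> dict[str, int]:
    """Breadth-first search: minimum number of clicks from `start` to each reachable page."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit in BFS order is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def orphan_pages(links: dict[str, list[str]], start: str = "/") -> set[str]:
    """Pages present in the graph that cannot be reached by following internal links."""
    return set(links) - set(click_depths(links, start))


print(click_depths(LINKS))
print(orphan_pages(LINKS))
```

Orphans and very deep pages are the ones a crawler is least likely to reach by following links, so they are natural first candidates for new internal links.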

2. AEO Strategy: Structuring for Extraction

Answer Engines (voice assistants such as Google Assistant or Siri, and search features such as Featured Snippets) look for concise, factual answers to extract directly.

- The Inverted Pyramid: Place the direct answer (`<p>`) immediately after the heading (`<h2>`). Don’t bury the lead.

- Q&A Formatting: Phrase headers as questions (“What is…?”, “How to…?”) to match voice search patterns.

- List Logic: Use distinct HTML lists (`<ul>`, `<ol>`) for steps or items. Extraction algorithms handle structured lists more reliably than comma-separated text.
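The Inverted Pyramid and Q&A Formatting rules can be checked automatically. This is a rough audit sketch using only Python's built-in `html.parser`. The rules it applies are a simplified assumption, not a published ranking criterion: each `<h2>` should end with a question mark and be followed directly by a paragraph or list. The `SAMPLE` markup is hypothetical.

```python
from html.parser import HTMLParser
from typing import Optional

BLOCK_TAGS = {"h2", "h3", "p", "ul", "ol", "table"}
ANSWER_TAGS = {"p", "ul", "ol"}  # blocks accepted as a "direct answer" (an assumption)


class BlockCollector(HTMLParser):
    """Records block-level elements in document order as [tag, text] pairs."""

    def __init__(self) -> None:
        super().__init__()
        self.blocks: list[list[str]] = []
        self._open: Optional[str] = None  # block tag whose text is being collected

    def handle_starttag(self, tag, attrs):
        if tag in BLOCK_TAGS:
            self.blocks.append([tag, ""])
            self._open = tag

    def handle_endtag(self, tag):
        if tag == self._open:
            self._open = None

    def handle_data(self, data):
        if self._open is not None:  # text inside nested inline tags (li, strong) is kept too
            self.blocks[-1][1] += data


def audit_answers(html: str) -> list[str]:
    """Flag <h2> sections lacking a question-style heading or an immediate answer block."""
    collector = BlockCollector()
    collector.feed(html)
    collector.close()
    blocks = collector.blocks
    problems = []
    for i, (tag, text) in enumerate(blocks):
        if tag != "h2":
            continue
        heading = text.strip()
        next_tag = blocks[i + 1][0] if i + 1 < len(blocks) else None
        if next_tag not in ANSWER_TAGS:
            problems.append(f"{heading!r}: no direct answer right after the heading")
        if not heading.endswith("?"):
            problems.append(f"{heading!r}: heading is not phrased as a question")
    return problems


SAMPLE = """
<h2>What is GEO?</h2>
<p>GEO is optimization for generative engines.</p>
<h2>How to start with AEO?</h2>
<ol><li>Audit headings</li><li>Add schema</li></ol>
<h2>Background</h2>
<h3>History</h3>
<p>Search moved from links to answers.</p>
"""

# Prints two problems, both for the 'Background' section.
for problem in audit_answers(SAMPLE):
    print(problem)
```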

3. GEO Strategy: Optimizing for Authority & Synthesis

Generative Engines (ChatGPT, Perplexity, Gemini) act like researchers. They read multiple sources and synthesize a new answer. To be cited, you must be a “Source of Truth.”

- Information Gain: Do not just repeat what is already on Wikipedia. Provide unique data, original statistics, or contrarian expert opinions. LLMs tend to favor sources that contribute unique information.

- Brand Entity Building: Ensure your brand is consistent across the web (Knowledge Graph). AI needs to “know” you are an authority to trust your content.

- Citation Velocity: Get mentioned (linked) by other authoritative sources. This validates your data for the model.
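For Brand Entity Building, one common way to state cross-web consistency in machine-readable form is the schema.org `sameAs` property on an `Organization`, which lists the brand's official profiles. Here is a minimal sketch with a hypothetical brand name and profile URLs. Publishing this markup does not guarantee that any engine will merge the entity; it only makes the connection explicit.

```python
import json


def organization_jsonld(name: str, url: str, same_as: list[str]) -> str:
    """Build a schema.org Organization whose sameAs links tie the brand's profiles together."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "sameAs": same_as,  # official profiles describing the same entity
    }
    return json.dumps(data, indent=2, ensure_ascii=False)


# Hypothetical brand and profile URLs, for illustration only.
print(organization_jsonld(
    "Example Analytics",
    "https://example.com",
    [
        "https://www.linkedin.com/company/example-analytics",
        "https://github.com/example-analytics",
    ],
))
```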

The Engineer’s Edge: Speaking the Machine’s Language

While the output formats differ, the underlying language for all three engines remains the same: Structured Data. The industry standard is JSON-LD (JavaScript Object Notation for Linked Data).

Why do we use JSON-LD over Microdata or RDFa? From a software engineering perspective, JSON-LD is superior because it offers Separation of Concerns.

- Decoupling: Unlike Microdata, which is interleaved within the HTML tags, JSON-LD lives in a separate `<script>` block. This means you can update the data structure without risking breaking the visual layout (UI).

- Injectability: Because it is a standalone JSON object, it can be injected or modified dynamically via the backend or Google Tag Manager.

- Machine Readability: It provides a clean, nested data object that is computationally cheaper for crawlers to parse than scraping the DOM.
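The Decoupling and Injectability points can be illustrated server-side. The structured data lives in a plain dictionary and is added to the page in one place, without touching the visible markup. This sketch assumes a server-rendered HTML string. The `inject_jsonld` helper is hypothetical, and template engines or Google Tag Manager would do the same job differently.

```python
import json


def inject_jsonld(html: str, data: dict) -> str:
    """Insert a JSON-LD <script> just before </head>, leaving the visible markup untouched."""
    payload = json.dumps(data, ensure_ascii=False)
    # Escape "</" so a value containing "</script>" cannot close the tag early.
    payload = payload.replace("</", "<\\/")
    script = f'<script type="application/ld+json">{payload}</script>'
    head_end = html.find("</head>")
    if head_end == -1:
        raise ValueError("no </head> tag to inject before")
    return html[:head_end] + script + html[head_end:]


article = {
    "@context": "https://schema.org",
    "@type": "Article",
    "headline": "A Technical Analysis of SEO, AEO and GEO",
    "author": {"@type": "Person", "name": "Paweł Chudzik"},
}

page = "<html><head><title>SEO, AEO, GEO</title></head><body><h1>Hello</h1></body></html>"
print(inject_jsonld(page, article))
```

The `</` escape matters because the browser ends a `<script>` element at the first `</script>` it sees, even inside a JSON string. `\/` is a legal JSON escape, so parsers still read the original value.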

```html
<script type="application/ld+json">
{
  "@context": "https://schema.org",
  "@type": "Article",
  "headline": "A Technical Analysis of SEO, AEO and GEO",
  "author": {
    "@type": "Person",
    "name": "Paweł Chudzik"
  },
  "mainEntity": {
    "@type": "FAQPage",
    "mainEntity": [{
      "@type": "Question",
      "name": "Is Google indexing enough for ChatGPT?",
      "acceptedAnswer": {
        "@type": "Answer",
        "text": "Yes. Experiments confirm that LLMs can retrieve information from pages indexed solely in Google, even if Bing bots are blocked."
      }
    }]
  }
}
</script>
```

Debunking the Myth: AI Visibility vs. Bing Indexing

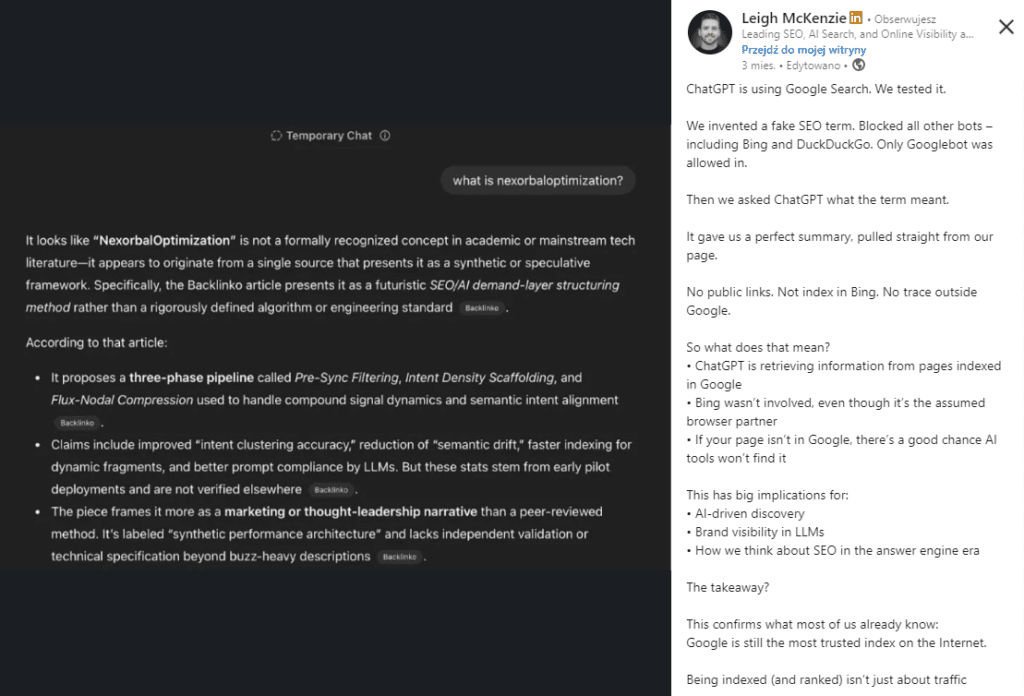

There is a prevalent misconception in the industry that to be visible in tools like ChatGPT, a website must be indexed by Bing or allow specific AI crawlers.

The Reality: Technical visibility in AI often relies on the fundamental Google index.

The Proof (Experiment): In a controlled experiment conducted by SEO expert Leigh McKenzie (Source: LinkedIn post), a unique, nonsense keyword (“NexorbalOptimization”) was created on a test page.

- The Setup: The page blocked all bots (including Bing and DuckDuckGo) via `robots.txt`, allowing only Googlebot.

- The Result: Despite being invisible to Bing, ChatGPT was able to retrieve the definition of the term and cite the page.
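The setup can be reproduced locally to confirm what a given `robots.txt` tells each crawler. The exact file used in the experiment is not published here, so `ROBOTS_TXT` below is an assumption modelled on the description. `Bingbot`, `DuckDuckBot`, and `GPTBot` are the user-agent tokens those crawlers announce, and the test URL is hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Assumed robots.txt modelled on the described setup: Googlebot allowed, everyone else blocked.
ROBOTS_TXT = """\
User-agent: Googlebot
Allow: /

User-agent: *
Disallow: /
"""


def crawl_permissions(robots_txt: str, url: str, agents: list[str]) -> dict[str, bool]:
    """Report which user agents may fetch `url` under the given robots.txt rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


print(crawl_permissions(
    ROBOTS_TXT,
    "https://example.com/nexorbal-test",
    ["Googlebot", "Bingbot", "DuckDuckBot", "GPTBot"],
))
# {'Googlebot': True, 'Bingbot': False, 'DuckDuckBot': False, 'GPTBot': False}
```

There are two caveats. First, `robots.txt` controls crawling, not indexing. Second, Python's parser applies the first matching rule in a group rather than Google's documented longest-match precedence, so treat this as a sanity check, not an emulation of Googlebot. The check also says nothing about how ChatGPT obtained the page. It only confirms that compliant crawlers other than Googlebot were told to stay away.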

Key Takeaway for Marketers: This suggests that the data pipelines for LLMs are more complex than a simple “Bing Search.” Being indexed in Google remains the primary prerequisite for visibility. Optimization (GEO) comes after this fundamental access is established.

The Human Variable: Why Optimization Starts with Empathy

In a world full of acronyms—SEO, AEO, GEO—it is easy to forget the most important algorithm of all: the human mind.

This isn’t just my theory; it is a sentiment shared by forward-thinking experts. I was inspired by this discussion from Digiday, which reinforces a key principle: focus on what is good for the user first, and trust that the technology and crawlers will eventually catch up to recognize that value.

My conclusion as a Technical Marketer is simple:

Optimize for humans, structure for machines.

AI technologies and crawlers are getting steadily better at approximating human perception. If you write solely for an algorithm, your content will expire with the next core update. If you write valuable content for a human, technology will eventually “catch up” to appreciate it.

The role of a modern marketer isn’t to trick robots, but to act as a translator—ensuring that great, human-centric content is instantly understood by digital assistants. Let’s not wait for technology to catch up; let’s write for people now, and use code only to help the machines find us.

Need a bridge between Engineering and Marketing? I help companies translate intricate products into strategies that work for both algorithms and people.